XML sitemap SEO still matters today, and this is not theory. We see pages left unindexed for weeks, crawl budget wasted on filter URLs, and big sites confusing Google every day. This is common. You publish good pages, but bots miss them, like products stuck in a warehouse with no door.

XML sitemaps do not rank pages. Accept that first. What they do is help with discovery, prioritisation, and crawl signals: you tell Google what to look at and what changed, and when. Do this right and indexing moves faster, especially for large blogs, ecommerce stores, and service pages. A sitemap works like a clean map: bots walk straight to your pages instead of guessing paths, as steady as a running machine.

This guide covers the real work we do, not textbook theory.

- Fix non-indexed pages by cleaning sitemap URLs and using lastmod correctly

- Save crawl budget by removing junk and duplicate paths

- Scale indexing for large sites and new sections without chaos

What an XML Sitemap Actually Does (And What It Doesn't)

What is an XML sitemap, really?

Sitemap meaning is simple if you strip the hype. An XML sitemap is a crawl communication file. It lists your important URLs so search engines know which pages you want crawled. It helps bots find pages faster, especially new or deeply buried ones. It does not push rankings by itself.

Direct definition

An XML sitemap is a structured file that shows search engines which pages exist on your site, when they were updated, and how they relate. It guides crawlers through your site, like a map on a factory floor, not a sales booster.

What XML Sitemaps Help With vs What They Don't

| Helps With | Doesn't Help With |
|---|---|
| Faster discovery of new pages | Instant ranking improvement |
| Better crawl coverage on large sites | Fixing bad content |
| Indexing support for pages with weak internal links | Replacing internal linking |
| Crawl priority signals | Guaranteed indexing |

Now listen. Google's documentation is clear: sitemaps are hints, not commands. We see this daily in agency crawl data. Small sites with clean internal links? The sitemap barely changes anything; Google crawls them fine. Large sites with filters, pagination, or news URLs? The sitemap matters more. It focuses crawler attention and reduces waste.

Do this. Submit the sitemap in Search Console. Keep it clean: remove redirected and noindex URLs. Update it when content changes. Think of it like organising tools before work: the work itself still matters. SEO works slowly but steadily. The sitemap just keeps the machine running smoothly; it is not magic.
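The cleanup rule above fits in a few lines of Python. This is a minimal sketch, assuming you already have crawl results for each URL (for example, from a site crawler export); the `CrawlResult` record, its fields, and `clean_sitemap_urls` are hypothetical, not any specific tool's format.

```python
from dataclasses import dataclass

@dataclass
class CrawlResult:
    # Hypothetical shape of one row from a crawler export.
    url: str
    status: int     # final HTTP status code returned for this exact URL
    noindex: bool   # True if meta robots or X-Robots-Tag says noindex

def clean_sitemap_urls(results):
    """Hypothetical helper: keep URLs that answer 200 directly and may be indexed."""
    return [r.url for r in results if r.status == 200 and not r.noindex]

results = [
    CrawlResult("https://example.com/services/", 200, False),
    CrawlResult("https://example.com/old-offer/", 301, False),  # redirected
    CrawlResult("https://example.com/thank-you/", 200, True),   # noindex
]
print(clean_sitemap_urls(results))  # ['https://example.com/services/']
```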

Deep Dive into Sitemaps

Discover 5 surprising truths about sitemaps that go beyond the basics.

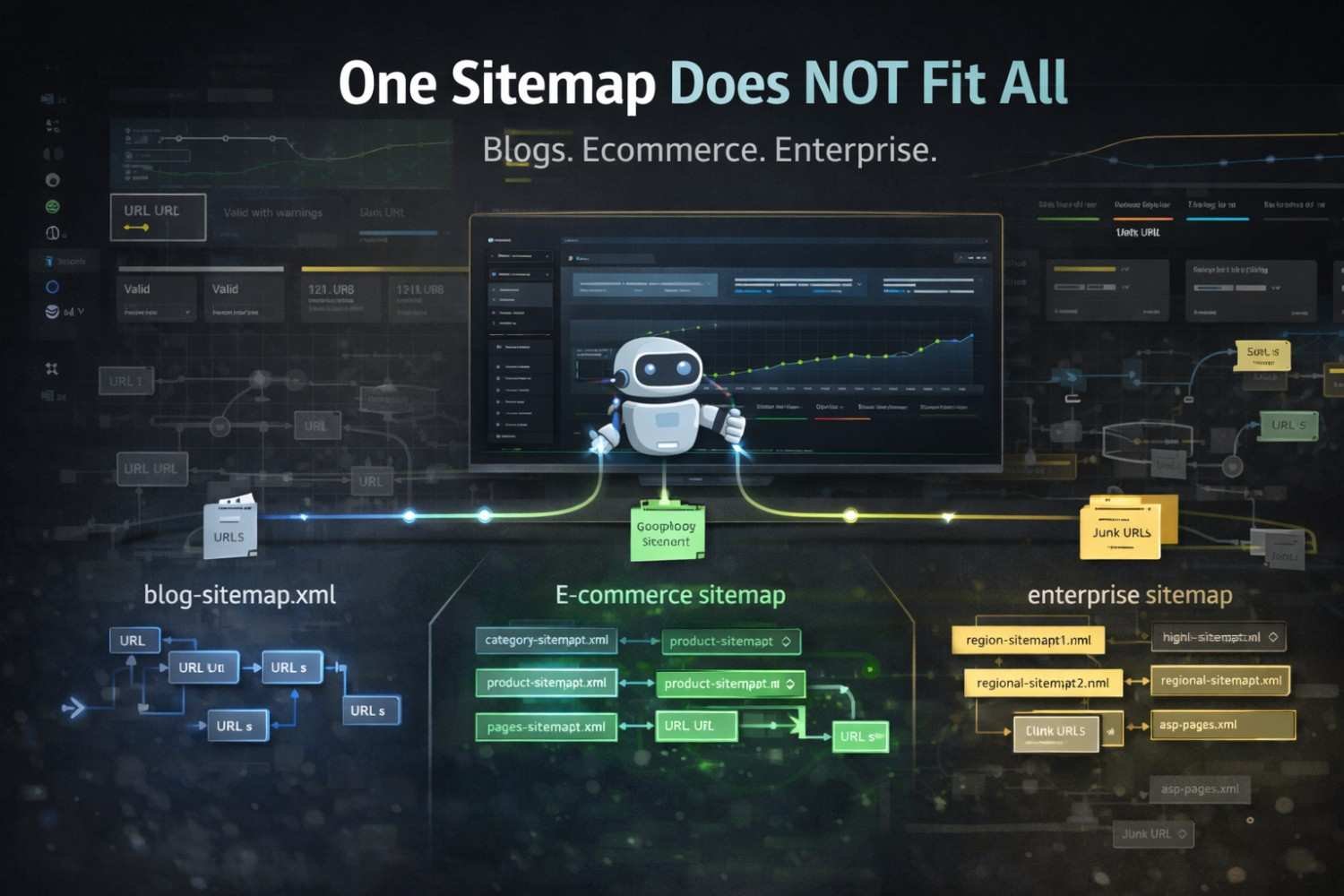

Read the Full StoryHow We Create XML Sitemaps for Different Website Types

When we create an XML sitemap for a website, we do not use one fixed template. Sitemap generation depends on site type, publishing pace, and how Google crawls the site. A blog needs one logic; an ecommerce site needs another. Get this wrong and search bots get confused, like a delivery driver handed the wrong map.

Blogs

Start with clean post URLs. Include only indexable posts and key category pages. In most cases, exclude tag pages. Update the sitemap daily or weekly, depending on how often you publish. A blog is like planting seeds for long-term growth, and too many weak URLs slow that growth down. So keep the sitemap light and fresh.
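A minimal sketch of the blog rule, assuming WordPress-style archive paths such as `/tag/`, `/author/` and `/page/2/`, and assuming the input list already holds only indexable URLs. The pattern, the `blog_sitemap_urls` helper, and the example URLs are hypothetical; adjust them to your own structure.

```python
import re

# Hypothetical WordPress-style archive paths; adjust to your own URL structure.
ARCHIVE_PATHS = re.compile(r"/(tag|author|page)/")

def blog_sitemap_urls(urls, key_categories):
    """Hypothetical helper: keep posts plus chosen category pages; drop archives."""
    keep = []
    for url in urls:
        if ARCHIVE_PATHS.search(url):
            continue  # tag, author and paginated archive pages stay out
        if "/category/" in url and url not in key_categories:
            continue  # only the key category pages make the cut
        keep.append(url)
    return keep

urls = [
    "https://example.com/blog/xml-sitemap-guide/",
    "https://example.com/blog/tag/seo/",
    "https://example.com/blog/category/seo/",
    "https://example.com/blog/category/misc/",
    "https://example.com/blog/page/2/",
]
print(blog_sitemap_urls(urls, {"https://example.com/blog/category/seo/"}))
```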

Ecommerce

Split sitemaps into product, category, and brand pages. Control parameter URLs. Exclude out-of-stock and filtered pages. Prioritise high-margin products. An ecommerce sitemap should run like a steady machine, not an overloaded one. If you sell 5,000 products, do not push them all in one file. Break them up.
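The filtering part of the ecommerce rule can be sketched under two assumptions: each item is a dict with hypothetical keys `url`, `type` and `in_stock`, and any URL with a query string counts as a filtered or parameter URL. `ecommerce_sitemap_urls` is a hypothetical helper; splitting the surviving URLs into product, category and brand files is a separate step.

```python
from urllib.parse import urlsplit

def ecommerce_sitemap_urls(items):
    """Hypothetical helper; items use hypothetical keys 'url', 'type', 'in_stock'."""
    keep = []
    for item in items:
        if urlsplit(item["url"]).query:
            continue  # filter, sort and tracking parameter URLs stay out
        if item["type"] == "product" and not item.get("in_stock", True):
            continue  # out-of-stock products stay out
        keep.append(item["url"])
    return keep

items = [
    {"url": "https://shop.example.com/p/blue-mug", "type": "product", "in_stock": True},
    {"url": "https://shop.example.com/p/red-mug", "type": "product", "in_stock": False},
    {"url": "https://shop.example.com/c/mugs", "type": "category"},
    {"url": "https://shop.example.com/c/mugs?color=blue&sort=price", "type": "category"},
    {"url": "https://shop.example.com/b/acme", "type": "brand"},
]
print(ecommerce_sitemap_urls(items))
```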

Enterprise / Large Sites

Use sitemap index files. Segment by content type and region. Monitor crawl budget monthly. Update only changed URLs. Large sites need discipline. One mistake scales fast. So control every feed carefully.
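One way to update only changed URLs is to hash each page's content and move `lastmod` only when the hash changes. A minimal sketch, assuming you store the previous hash and date per URL between runs (the `previous` dict stands in for that hypothetical store, and `update_lastmod` is a hypothetical helper). Hash the main content only, not the whole template; otherwise a sidebar or footer change bumps every date.

```python
import hashlib
from datetime import date

def update_lastmod(pages, previous, today=None):
    """
    Hypothetical helper.
    pages:    {url: main content text} from the current build
    previous: {url: (content_hash, lastmod)} saved from the last run (hypothetical store)
    Returns a fresh {url: (content_hash, lastmod)} mapping.
    """
    today = today or date.today().isoformat()
    state = {}
    for url, body in pages.items():
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        old = previous.get(url)
        if old is not None and old[0] == digest:
            state[url] = old              # content unchanged: keep the old lastmod
        else:
            state[url] = (digest, today)  # new or changed page: stamp today's date
    return state

previous = {"https://example.com/a": (hashlib.sha256(b"hello").hexdigest(), "2024-01-10")}
state = update_lastmod(
    {"https://example.com/a": "hello", "https://example.com/b": "new page"},
    previous,
    today="2024-06-01",
)
print(state["https://example.com/a"][1])  # 2024-01-10 (unchanged)
print(state["https://example.com/b"][1])  # 2024-06-01 (new)
```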

Our internal sitemap steps

- Audit live URLs first.

- Decide what deserves indexing.

- Create an XML sitemap by type.

- Compress and submit to Search Console.

- Track crawl stats and errors.

- Update rules when the site changes.
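For the compress step, Python's standard `gzip` module is enough. A minimal sketch; the 50MB limit applies to the uncompressed file, so the check runs before compression. `compress_sitemap` is a hypothetical helper name.

```python
import gzip

MAX_UNCOMPRESSED_BYTES = 50 * 1024 * 1024  # the 50MB limit counts uncompressed bytes

def compress_sitemap(xml_text):
    """Hypothetical helper: return gzip bytes to upload as, e.g., sitemap.xml.gz."""
    raw = xml_text.encode("utf-8")
    if len(raw) > MAX_UNCOMPRESSED_BYTES:
        raise ValueError("over 50MB uncompressed: split it into more sitemap files first")
    return gzip.compress(raw)

sample = ('<?xml version="1.0" encoding="UTF-8"?>'
          '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"></urlset>')
packed = compress_sitemap(sample)
print(gzip.decompress(packed).decode("utf-8") == sample)  # True
```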

Where most sitemap tutorials go wrong

They push auto-generated, bloated sitemaps: every URL, every filter, every junk page. This looks easy, but it hurts SEO. A sitemap is not a storage bin. It is a direction board: it tells Google where to go, not everywhere it could go. Guide the bots, and traffic flows like water through a clear funnel.


XML Sitemap Format Google Actually Processes Correctly

A valid XML sitemap format is the first thing Google checks before it uses your file. Use correct sitemap syntax, or your file gets submitted but ignored. This happens a lot; I see it in real client accounts.

Start with the required tags only. Keep it clean.

Required tags

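- `<urlset>` with the correct namespace (`http://www.sitemaps.org/schemas/sitemap/0.9`)

- `<url>` for each page

- `<loc>` with the full, absolute URL, not a relative one

- `<lastmod>` in W3C Datetime (ISO 8601) format, such as 2024-05-02; strictly optional in the protocol, but Google uses it when it is consistently accurate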
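A minimal sketch that emits only the tags listed above, using Python's standard `xml.etree.ElementTree`. The `build_urlset` helper and the example URLs are hypothetical. A side benefit: ElementTree escapes characters such as `&` in URLs, which the sitemap protocol requires.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_urlset(entries):
    """Hypothetical helper. entries: iterable of (absolute_url, date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in entries:
        if not loc.startswith(("https://", "http://")):
            raise ValueError(f"<loc> must be a full URL, got {loc!r}")
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc                       # & is written as &amp;
        ET.SubElement(url, "lastmod").text = modified.isoformat()  # e.g. 2024-05-02
    # xml_declaration=True puts <?xml ...?> as the very first bytes of the file
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)

data = build_urlset([("https://example.com/", date(2024, 5, 2))])
print(data.decode("utf-8"))
```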

Optional tags (use carefully)

- `<changefreq>`: ignored by Google

- `<priority>`: ignored by Google

- `<image:image>`: only if images matter for the page

Do not force optional tags everywhere. Google does not reward them. Many sitemaps look "rich" but fail validation. One wrong namespace can make Google skip the whole file.

I have seen cases where a sitemap shows as "Submitted" in Search Console, but the status reads "Couldn't fetch." The cause was a small syntax error, like an extra space or a wrong tag order (yes, that small).

Mini checklist

- Validate the sitemap before uploading.

- Use UTF-8 encoding.

- Put only one URL in each `<url>` entry.

- Keep each file under the limits: 50,000 URLs and 50MB uncompressed.

- Check Search Console. Fix errors. Resubmit.
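The checklist can be automated as a quick pre-upload check. A minimal sketch using only the standard library; `check_sitemap` is a hypothetical helper, and it is not full schema validation against the official XSD. It does catch the small errors above: a stray space before the XML declaration makes the file unparseable, and a wrong namespace shows up as an unexpected root tag.

```python
import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024

def check_sitemap(data):
    """Hypothetical helper: return a list of problems found in raw sitemap bytes."""
    problems = []
    if len(data) > MAX_BYTES:
        problems.append("file is over 50MB uncompressed")
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return problems + ["file is not valid UTF-8"]
    try:
        root = ET.fromstring(data)
    except ET.ParseError as err:
        return problems + [f"XML does not parse: {err}"]
    if root.tag != NS + "urlset":
        return problems + [f"unexpected root {root.tag}; check the namespace"]
    urls = root.findall(NS + "url")
    if len(urls) > MAX_URLS:
        problems.append(f"{len(urls)} URLs; the limit is 50,000 per file")
    for i, url in enumerate(urls, 1):
        locs = url.findall(NS + "loc")
        if len(locs) != 1:
            problems.append(f"<url> #{i} has {len(locs)} <loc> tags; expected 1")
    return problems

# Usage: problems = check_sitemap(open("sitemap.xml", "rb").read())
```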

Now Google processes it like a clear road, not a broken map.

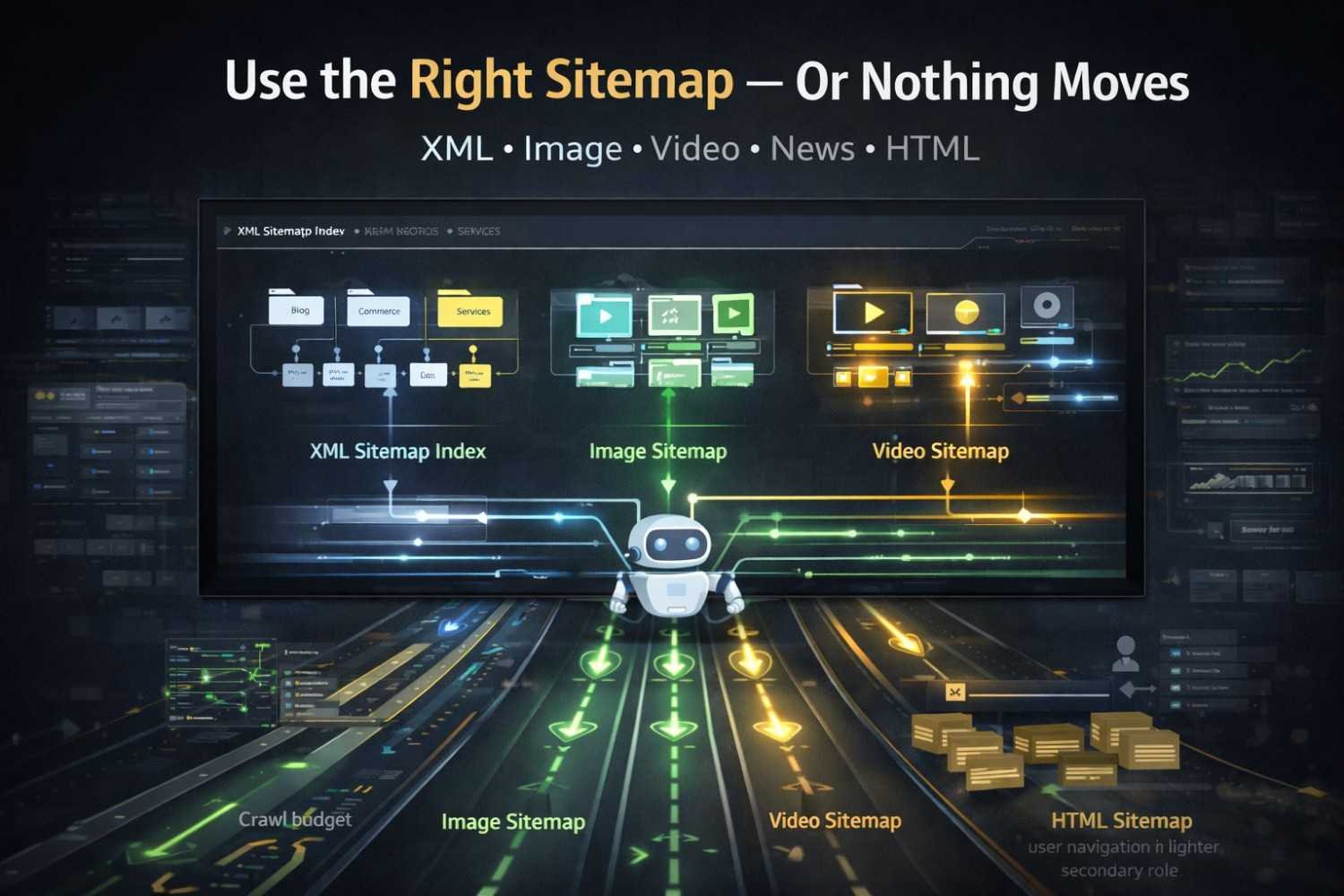

Different Sitemap Types and When We Recommend Using Them

Choosing the right types of sitemaps is not a checkbox task. It is a control system. You guide search engines where to crawl, when to come back, and what really matters. Use the wrong one and nothing breaks, but nothing improves, either. Use the right one, and indexing moves faster, like opening the correct lane on a busy road.

XML Sitemap Index for Large & Growing Websites

Use an XML sitemap index when your site crosses 1,000 URLs or grows every month. Blog posts, ecommerce pages, and service pages get heavy together. Split sitemaps by type or category and connect them with an index. Do this when crawl budget starts getting wasted on old URLs. Skip it if your site is small and static; one clean sitemap is enough. Extra files there only add confusion, like adding too many drawers for a few clothes.
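A sitemap index is just another small XML file that lists child sitemaps. A minimal sketch, assuming the child files (the example names `sitemap-blog.xml` and `sitemap-product.xml` are hypothetical) are already generated and uploaded; you then submit only the index URL in Search Console. `build_sitemap_index` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap_index(children):
    """Hypothetical helper. children: list of (child sitemap URL, lastmod string)."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc, lastmod in children:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = loc
        ET.SubElement(entry, "lastmod").text = lastmod  # when that child file last changed
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)

index_xml = build_sitemap_index([
    ("https://example.com/sitemap-blog.xml", "2024-05-02"),
    ("https://example.com/sitemap-product.xml", "2024-05-01"),
])
print(index_xml.decode("utf-8"))
```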

Image Sitemap for Discover & Visual Search

Add an image sitemap when images drive traffic. Travel, real estate, food, ecommerce catalogs. This helps Google see images as assets, not decoration. Do this if your images are original and optimised. Skip it if images are stock or reused. No benefit then. An image sitemap without image SEO is like labelling empty boxes.
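A minimal image sitemap sketch: each page's `<url>` entry lists its images in Google's image namespace. It uses only `image:loc`, since Google has deprecated the other image sitemap tags, and caps each page at 1,000 images, Google's documented per-`<url>` limit. The `build_image_sitemap` helper and the input shape are hypothetical.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMG = "http://www.google.com/schemas/sitemap-image/1.1"
ET.register_namespace("image", IMG)  # serialise as image:image, not ns1:image

def build_image_sitemap(pages):
    """Hypothetical helper. pages: {page URL: [image URLs shown on that page]}"""
    urlset = ET.Element(f"{{{SM}}}urlset")
    for page_url, images in pages.items():
        url = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(url, f"{{{SM}}}loc").text = page_url
        for image_url in images[:1000]:  # Google reads up to 1,000 images per <url>
            image = ET.SubElement(url, f"{{{IMG}}}image")
            ET.SubElement(image, f"{{{IMG}}}loc").text = image_url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True, default_namespace=SM)

image_xml = build_image_sitemap({
    "https://example.com/villas/goa/": [
        "https://example.com/img/goa-pool.jpg",
        "https://example.com/img/goa-terrace.jpg",
    ],
})
print(image_xml.decode("utf-8"))
```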

Video Sitemap for Indexing Rich Media

Use a video sitemap when video is core content: tutorials, product demos, and landing page explainers. It helps with indexing and preview features. Use it even if you already use structured data (they work together). Skip it if your videos are embedded from YouTube and are not key to ranking. Don't overbuild here.
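Google's video sitemap documentation lists a few tags as required for each video: a thumbnail URL, a title, a description, and either the video file URL (`content_loc`) or a player URL (`player_loc`). A minimal pre-check sketch, assuming each video is a dict keyed by those tag names without the `video:` prefix; `missing_video_fields` is a hypothetical helper.

```python
# Hypothetical helper; keys mirror Google's video tag names without the "video:" prefix.
REQUIRED = ("thumbnail_loc", "title", "description")

def missing_video_fields(video):
    """Return the required video fields that are absent or empty."""
    missing = [field for field in REQUIRED if not video.get(field)]
    if not (video.get("content_loc") or video.get("player_loc")):
        missing.append("content_loc or player_loc")
    return missing

demo = {
    "title": "Product demo",
    "description": "Two-minute walkthrough of the dashboard.",
    "thumbnail_loc": "https://example.com/thumbs/demo.jpg",
    "player_loc": "https://example.com/player/demo",
}
print(missing_video_fields(demo))  # []
```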

Google News Sitemap for Time-Sensitive Content

Use a Google News sitemap only if you publish news-style content daily or very frequently. It helps with fast discovery. Publishers need this; brands and agencies usually don't. Skip it if you post blogs once a week. It brings zero value then (and creates false expectations).
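Google's News sitemap guidelines say to include only articles published in the last two days, with at most 1,000 `news:news` entries per sitemap. A minimal selection sketch, assuming each article is a dict with a hypothetical timezone-aware `published` datetime; `news_sitemap_articles` is a hypothetical helper, and building the actual news tags is left out.

```python
from datetime import datetime, timedelta, timezone

MAX_NEWS_URLS = 1000

def news_sitemap_articles(articles, now=None):
    """Hypothetical helper: articles from the last 48 hours, newest first, capped."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=2)
    recent = [a for a in articles if a["published"] >= cutoff]
    recent.sort(key=lambda a: a["published"], reverse=True)
    return recent[:MAX_NEWS_URLS]

now = datetime(2024, 5, 3, 12, 0, tzinfo=timezone.utc)
articles = [
    {"title": "A", "published": datetime(2024, 5, 2, 8, 0, tzinfo=timezone.utc)},
    {"title": "B", "published": datetime(2024, 4, 30, 9, 0, tzinfo=timezone.utc)},
    {"title": "C", "published": datetime(2024, 5, 3, 9, 0, tzinfo=timezone.utc)},
]
print([a["title"] for a in news_sitemap_articles(articles, now)])  # ['C', 'A']
```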

HTML Sitemap (User Experience vs SEO Reality)

An HTML sitemap helps users, not rankings. Use it for large, navigation-heavy sites. It can reduce bounce and helps humans find pages. The SEO impact is indirect. In our audits, many sites don't need one. Don't add it just because someone said so.

Use sitemaps like tools, not decorations. Pick what moves the needle.


XML Sitemap Optimisation Based on Crawl Data (Not Assumptions)

XML sitemap optimisation works only when you trust crawl data, not guesses. We build sitemap SEO best practices using real Search Console crawl stats, index coverage reports, and server logs. We test, break, and fix. Many times, the sitemap looks clear, but Google crawls it badly. So stop assuming. Read the data. Then act.

We start inside Google Search Console. Open the Pages report. Check "Indexed" and "Not indexed." Compare those numbers with the URLs submitted in your sitemap. You will see gaps. We have seen cases where 18k URLs were submitted and only 9k were indexed. The sitemap was not wrong. The strategy was.
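The comparison is simple set arithmetic once you have both URL lists. A minimal sketch, assuming you have exported the submitted sitemap URLs and the indexed URLs from Search Console into two Python lists (loading is left out, since export formats vary); `coverage_gap` is a hypothetical helper.

```python
def coverage_gap(submitted, indexed):
    """Hypothetical helper: (sitemap URLs not indexed, share of sitemap URLs indexed)."""
    submitted, indexed = set(submitted), set(indexed)
    missing = sorted(submitted - indexed)
    rate = len(submitted & indexed) / len(submitted) if submitted else 0.0
    return missing, rate

submitted = ["https://example.com/a", "https://example.com/b",
             "https://example.com/c", "https://example.com/d"]
indexed = ["https://example.com/a", "https://example.com/b", "https://example.com/x"]
missing, rate = coverage_gap(submitted, indexed)
print(missing)        # ['https://example.com/c', 'https://example.com/d']
print(f"{rate:.0%}")  # 50%
```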

Include Only Index-Worthy URLs

Remove weak URLs from the sitemap, and be strict about it: thin pages, tag pages, filtered URLs, expired offers. We removed 3,200 thin pages from one ecommerce sitemap, and the index rate jumped from 54% to 81% in four weeks. Crawling became cleaner, and Google stopped wasting time. It is like cleaning stones from rice before cooking: everything after runs smoother.
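Being strict is easier when the rules are written down as code. A minimal sketch covering two of the cases above, thin pages and expired offers; the 300-word cut-off, the `word_count` and `expires` fields, and the `index_worthy` helper are hypothetical assumptions, not a rule Google publishes.

```python
from datetime import date

MIN_WORDS = 300  # hypothetical cut-off for "thin"; tune it per page template

def index_worthy(page, today):
    """Hypothetical helper; page has 'word_count' and an optional 'expires' date."""
    if page["word_count"] < MIN_WORDS:
        return False  # thin page
    expires = page.get("expires")
    if expires is not None and expires < today:
        return False  # expired offer
    return True

today = date(2024, 5, 1)
print(index_worthy({"word_count": 120}, today))                               # False
print(index_worthy({"word_count": 800, "expires": date(2024, 4, 1)}, today))  # False
print(index_worthy({"word_count": 800}, today))                               # True
```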

Validate Sitemap Beyond "No errors"

"No errors" means nothing. Google does not flag soft problems clearly. Check for lastmod misuse, redirected URLs, and mismatched canonicals. We found sitemap URLs pointing to pages that declared a different canonical; Google crawled them but skipped indexing. Fix it: align each sitemap URL with its final canonical, then resubmit. Crawling then stabilises.

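Checking sitemap URLs against their declared canonicals can be done with Python's built-in `html.parser`. A minimal sketch, assuming you have already fetched each page's HTML; `CanonicalFinder` and `canonical_mismatch` are hypothetical names, and the comparison is an exact string match, so normalise trailing slashes and letter case first if your site mixes them.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Hypothetical helper: records the first <link rel="canonical"> href."""
    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower()
        if tag == "link" and rel == "canonical" and self.canonical is None:
            self.canonical = attrs.get("href")

def canonical_mismatch(sitemap_url, html):
    """Hypothetical helper: return the declared canonical if it differs, else None."""
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical and finder.canonical != sitemap_url:
        return finder.canonical  # list this URL in the sitemap instead
    return None

page = '<html><head><link rel="canonical" href="https://example.com/a"></head></html>'
print(canonical_mismatch("https://example.com/a?ref=nav", page))  # https://example.com/a
```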
Use Sitemap Reporting to Diagnose Indexing Problems

Compare sitemap URLs against indexed URLs and look for mismatches. If many URLs show "Discovered – currently not indexed," the sitemap is often too big or full of low-quality URLs. We track this weekly, mark each change, and then watch the movement. Sitemap reporting becomes a diagnostic tool, not a submission ritual. Like checking the fuel gauge before a long drive, not after the engine stops.
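A minimal sketch of the weekly check, assuming you export each sitemap URL with its Search Console page status into a dict. The status label is written as it appears above; match it to the exact text in your export. The 20% alert threshold and the `weekly_report` helper are hypothetical.

```python
from collections import Counter

DISCOVERED = "Discovered – currently not indexed"  # copy the exact label from your export

def weekly_report(statuses, alert_share=0.20):
    """Hypothetical helper. statuses: {sitemap URL: page status}."""
    counts = Counter(statuses.values())
    total = sum(counts.values())
    alert = total > 0 and counts[DISCOVERED] / total >= alert_share
    return counts, alert

statuses = {
    "https://example.com/a": "Indexed",
    "https://example.com/b": DISCOVERED,
    "https://example.com/c": DISCOVERED,
    "https://example.com/d": "Indexed",
}
counts, alert = weekly_report(statuses)
print(counts[DISCOVERED], alert)  # 2 True
```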

Sitemap Size & URL Segmentation Strategy

Split the sitemap by page type: blog, product, category, static. We tested this on a large content site. A single sitemap slowed crawling, so we split it into five. The blog sitemap got crawled faster, the product sitemap stabilised, and index coverage improved within two crawl cycles. Do not overthink it. Segment by intent and value (this helps Google prioritise). Sitemap optimisation is about discipline. SEO works slowly but steadily.
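Segmentation by page type can key off URL paths. A minimal sketch, assuming your page types live under path prefixes like `/blog/`, `/product/` and `/category/` (hypothetical; map them to your own structure), with everything else treated as static. `segment_urls` is a hypothetical helper; each group then becomes its own sitemap file.

```python
from urllib.parse import urlsplit

# Hypothetical prefixes; replace with your site's real URL structure.
SEGMENTS = {"/blog/": "blog", "/product/": "product", "/category/": "category"}

def segment_urls(urls):
    """Hypothetical helper: group URLs by page type; unmatched paths go to 'static'."""
    groups = {}
    for url in urls:
        path = urlsplit(url).path
        name = next((seg for prefix, seg in SEGMENTS.items() if path.startswith(prefix)), "static")
        groups.setdefault(name, []).append(url)
    return groups

groups = segment_urls([
    "https://example.com/blog/sitemap-tips/",
    "https://example.com/product/blue-mug/",
    "https://example.com/category/mugs/",
    "https://example.com/about/",
])
print(sorted(groups))  # ['blog', 'category', 'product', 'static']
```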

XML Sitemap Best Practices We Follow Across Client Projects

XML sitemap best practices matter when you want search engines to crawl your site without confusion. We follow a simple sitemap checklist across client projects, tested on real websites, not theory.

Our XML Sitemap Checklist

- Include only indexable URLs. Remove noindex pages, filters, and test URLs. Keep it clean.

- Update the sitemap automatically when new pages go live. Do not wait. Crawlers move fast.

- Use proper lastmod dates. Change it only when the content actually changes. Fake dates break trust.

- Split large sitemaps. Keep each file under 50,000 URLs and 50MB uncompressed. Big files slow crawlers down.

- Create separate sitemaps for images and videos if the site is media-heavy. It helps discovery.

- Submit the sitemap to Google Search Console and Bing Webmaster Tools. Then check the status weekly.

- Filter out thin or duplicate pages before building the sitemap. A sitemap is not a dumping box.

- Use HTTPS URLs only. Mixed protocols create crawl waste.

- Place the sitemap at the root level, for example yoursite.com/sitemap.xml, so bots find it fast. A root-level file can also list URLs from anywhere on the site.

- Validate the sitemap format before upload. One error can stop Google from reading the whole file (a small mistake, but big damage).
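Two checklist items, HTTPS-only URLs and the 50,000-URL cap, can be enforced when the files are written. A minimal sketch; `chunk_sitemap_urls` is a hypothetical helper that drops duplicates and non-HTTPS URLs silently (in practice, fix those at the source), and it splits by URL count only, so the 50MB size limit still needs its own check.

```python
MAX_URLS_PER_FILE = 50_000

def chunk_sitemap_urls(urls, size=MAX_URLS_PER_FILE):
    """Hypothetical helper: dedupe, keep HTTPS only, split into lists of <= size."""
    https_only = [u for u in dict.fromkeys(urls) if u.startswith("https://")]
    return [https_only[i:i + size] for i in range(0, len(https_only), size)]

urls = [f"https://example.com/p/{i}" for i in range(120_001)] + ["http://example.com/old"]
chunks = chunk_sitemap_urls(urls)
print([len(c) for c in chunks])  # [50000, 50000, 20001]
```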

This process works like a clear road map. If the map is messy, the driver is slow. Same with bots.

Sitemap strategy changes as the site grows. Small sites need simple files. Big ecommerce sites need a sitemap index, image sitemaps, and regular audits. Revisit the sitemap every few months: pages increase, structure shifts, old URLs die. SEO works slowly but steadily, like a running machine that never stops if you maintain it.

Key Takeaway: XML sitemaps are not about ranking pages directly. They're about efficient communication with search engines. A clean, well-structured sitemap tells Google exactly what to crawl, when to crawl it, and what's important. This saves crawl budget, speeds up indexing, and creates a foundation for SEO success.
